Do you want to know what Deep Learning (DL) is and why it is so popular in almost every field? If yes, then read this full article to understand deep learning and how it works. By the end of this article, all your doubts will be cleared.

Hello, & Welcome!

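In this blog, I am going to tell you what deep learning is, how it differs from machine learning, how it works, why and where it is used, its limitations, the most popular deep learning frameworks, and the main types of deep learning algorithms.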

Let’s get started-

What is Deep Learning?

Deep Learning is a subset of Machine Learning, which is, in turn, a subset of Artificial Intelligence. Artificial Intelligence is a field that aims to make machines mimic human intelligence. Machine Learning is one way to achieve the goals of AI: it uses algorithms that learn from data to train a model.

DL is a type of machine learning that is inspired by how the human brain works. In DL, this brain-inspired structure is called an artificial neural network.

Let’s understand DL more precisely and see how it differs from machine learning.

How is Deep Learning different from Machine Learning?

Suppose you have to tell oranges and apples apart. To perform this task with machine learning, you need to train the model and tell it the features of apples and oranges. These features may be the fruit’s color, shape, and so on. The model then predicts the output based on the features you gave it.

But,

In DL, the neural network learns the features by itself from the raw data (for example, from the pixels of fruit images), without you having to define them. That’s the power of deep learning: the neural network handles much of the work that a person would otherwise have to do by hand.
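To make the machine-learning side of this comparison concrete, here is a minimal Python sketch of the "you tell the model the features" approach. The two features (a redness score and a skin-smoothness score), their values, and the nearest-centroid rule are all illustrative assumptions, not a specific library's method. A deep learning model would instead receive the raw images and learn its own features.

```python
import math

# Hypothetical hand-crafted features: (redness 0..1, skin_smoothness 0..1).
# In classic machine learning, a person decides these features up front.
training_data = [
    ((0.90, 0.80), "apple"),
    ((0.80, 0.90), "apple"),
    ((0.85, 0.85), "apple"),
    ((0.30, 0.20), "orange"),
    ((0.20, 0.30), "orange"),
    ((0.25, 0.25), "orange"),
]

def centroids(data):
    """Average feature vector for each label."""
    sums, counts = {}, {}
    for features, label in data:
        s = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            s[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in s] for label, s in sums.items()}

def predict(features, centers):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centers, key=lambda label: math.dist(features, centers[label]))

centers = centroids(training_data)
print(predict((0.88, 0.75), centers))  # apple
print(predict((0.22, 0.28), centers))  # orange
```

If you chose poor features (say, fruit weight alone), this model could not recover on its own; that manual feature design is exactly the step deep learning automates.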

How does DL Work?

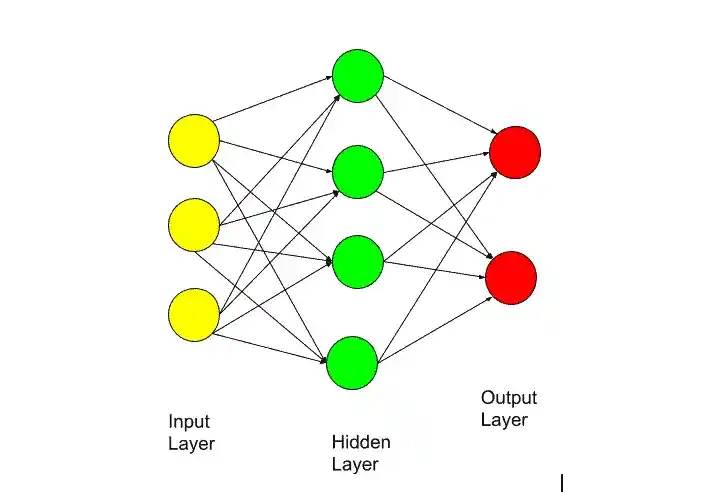

A deep learning model, that is, an artificial neural network, is made up of three kinds of layers-

- Input Layer.

- Hidden Layer.

- Output Layer.

As you can see in the picture, an artificial neural network is organized into these layers, and each circle is a neuron. The first layer is the input layer: whatever data you want to make a prediction from is given to it. The last layer is the output layer, which gives the result of your prediction. For example, in the apple-versus-orange problem, the output layer tells you whether the fruit is an apple or an orange.

Between these two layers sits the hidden layer, which loosely plays the role of the neurons in the human brain. When you see something, your eyes don’t produce an answer directly; the signal is first processed by a huge number of neurons, and only then do you recognize what you are looking at. This happens in a fraction of a second, so you don’t even notice it. This is the whole idea behind the artificial neural network.

First, we feed the data to the input layer, and the input layer passes it on to the hidden layer. Each connection between neurons has a weight, and each neuron has an extra number associated with it, called a bias. In the hidden layer, each neuron multiplies its inputs by their weights, adds them up together with its bias, and applies an activation function. The results flow forward layer by layer until the output layer produces the final output.
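Here is a minimal Python sketch of what a single neuron computes, as described above: multiply each input by its weight, add the bias, and pass the result through an activation function. The input values, weights, and bias are arbitrary illustrative numbers, and the sigmoid activation is an assumed choice; ReLU and tanh are other common options.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, plus bias, then activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values only (not from a trained model).
inputs = [1.0, 2.0]
weights = [0.5, -0.25]
bias = 0.1
# z = 1.0*0.5 + 2.0*(-0.25) + 0.1 = 0.1, and sigmoid(0.1) is about 0.525
print(round(neuron(inputs, weights, bias), 4))  # 0.525
```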

It sounds simple, but the hidden layers perform a large number of calculations. And one more thing: a network can have many hidden layers. The picture shows only one hidden layer, but real deep networks can have dozens or even hundreds, depending on the problem you are solving.
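Stacking layers simply means repeating the neuron computation layer by layer. Below is a minimal forward pass through a small fully connected network with two hidden layers. The layer sizes and all weights are made-up numbers, and using ReLU in the hidden layers with a sigmoid at the output is an assumption for illustration, not the only option.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """A fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through every (weights, biases, activation) layer in order."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
layers = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.3, 0.5]], [0.0, 0.1, -0.1], relu),
    ([[0.6, -0.2, 0.3], [-0.5, 0.4, 0.2]], [0.05, 0.0], relu),
    ([[1.0, -1.0]], [0.0], sigmoid),
]

output = forward([1.0, 0.5], layers)
print(output)  # a single value between 0 and 1
```

Adding another hidden layer is just one more entry in `layers`; training (not shown here) is what adjusts all these weights and biases.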

Why Deep Learning?

The three main reasons for using DL are-

- The huge amount of Data.

- Complex problems.

- Feature Extraction.

1. The huge amount of Data-

The very first reason to use deep learning over machine learning is the huge amount of data available today. Traditional machine learning algorithms work well on small and medium-sized datasets, but their performance tends to stop improving once you give them more and more data. Deep learning models, on the other hand, usually keep improving as the amount of training data grows. That’s why DL is the preferred choice when you have a lot of data.

In short, DL makes much better use of huge amounts of data than traditional machine learning does. DL can also deal with both structured data (such as tables) and unstructured data (such as images, audio, and text).

2. Complex Problems-

By complex problems, I mean real-world problems such as recognizing objects in images, understanding speech, or translating text. Traditional machine learning struggled to solve many of these problems well, and this is where deep learning comes into the picture: DL achieves much better results on such complex real-world problems. This is another reason why DL is often preferred over machine learning.

3. Feature Extraction–

In machine learning, you need to manually select and feed all the features related to your problem in order to train the model. The model then predicts the result based on the features you fed it. So if your real-world problem involves a huge number of features, doing this by hand is time-consuming and difficult.

But,

In deep learning, you only need to provide the raw objects or data; there is no need to design the features manually. The neural network learns useful features from the data by itself during training. Early layers tend to learn simple features, and deeper layers combine them into higher-level features that help predict the output. This is the biggest reason why deep learning is so popular.
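Automatic feature learning rests on one mechanism: the weights are learned from examples instead of being set by hand. A full deep network is too large to show here, so this sketch trains a single sigmoid neuron with gradient descent on a toy dataset (the logical AND of two inputs). It illustrates learning weights from data, not feature extraction in a deep network. The dataset, learning rate, and number of epochs are illustrative assumptions. Real deep learning applies the same idea, through backpropagation, to millions of weights across many layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: raw inputs and labels for the logical AND (no hand-made features).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([1.0, 0.0], 0.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]  # start knowing nothing
bias = 0.0
learning_rate = 0.5   # illustrative choice

for _ in range(5000):
    for x, y in data:
        prediction = sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)
        # For a sigmoid output with cross-entropy loss, the gradient with
        # respect to the pre-activation is simply (prediction - y).
        error = prediction - y
        weights = [wi - learning_rate * error * xi for wi, xi in zip(weights, x)]
        bias -= learning_rate * error

def predict(x):
    return 1 if sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias) >= 0.5 else 0

print([predict(x) for x, _ in data])  # [0, 0, 0, 1]
```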

NOTE- When you have a small dataset, traditional machine learning is often a good choice. But when you have a huge amount of data, prefer deep learning.

Best Deep Learning Courses

| S/N | Course Name | Rating | Time to Complete |
|---|---|---|---|
| 1. | DL Specialization– deeplearning.ai | 4.8/5 | 4 months (if you spend 5 hours per week) |
| 2. | DL Course– Udacity | 4.7/5 | 4 months (if you spend 12 hours per week) |
| 3. | DL in Python– Datacamp | NA | 20 hours |
| 4. | Intro to DL with PyTorch– Udacity (FREE Course) | NA | 2 months |
| 5. | TensorFlow 2 for DL Specialization– Coursera | 4.9/5 | 4 months (if you spend 7 hours per week) |
| 6. | Generative Adversarial Networks (GANs) Specialization– Coursera | 4.7/5 | 3 months (if you spend 8 hours per week) |
| 7. | Intro to TensorFlow for DL– Udacity (FREE Course) | NA | 2 months |
| 8. | DL A-Z™: Hands-On Artificial Neural Networks– Udemy | 4.5/5 | 22.5 hours |
| 9. | Professional Certificate in DL– edX | NA | 8 months (if you spend 2–4 hours per week) |
| 10. | Neural Networks and DL– deeplearning.ai | 4.9/5 | 20 hours |
| 11. | Intro to DL– Kaggle (FREE Course) | NA | 4 hours |
| 12. | Introduction to DL– edX (FREE Course) | NA | 16 weeks |
| 13. | DeepLearning.AI TensorFlow Developer Professional Certificate– deeplearning.ai | 4.7/5 | 4 months (if you spend 5 hours per week) |

Where Is DL Used?

DL is used in almost every field nowadays. The areas where deep learning is used the most are-

- Medical Field- Deep learning is used in the medical field to detect tumors or cancer cells in medical images, to measure how much area the cancer cells cover, and for many other tasks.

- Robotics- In robotics, deep learning helps robots perceive their surroundings so that they can move and react accordingly.

- Self-driving Cars– Using deep learning algorithms, these cars analyze their environment. For example, they recognize pedestrians, traffic lights, roads, and buildings as objects. Based on this analysis, the car decides how to drive. This is deep learning in action.

- Translation– Deep learning algorithms make it possible to translate text from one language into another.

- Customer Support– Nowadays, many companies use chatbots for customer support, and many of these chatbots are built with deep learning. They reply to your questions and try to solve your problem in much the same way a human agent would.

Limitations of DL

As I discussed, deep learning needs a huge amount of data to train a model. Most of our systems have only limited storage and memory, so not every machine has the capacity to deal with massive amounts of data.

The next limitation is computational power. Training deep learning models usually requires a GPU (Graphics Processing Unit), which has thousands of small cores, far more than a CPU. GPUs are also expensive.

The next limitation is training time. Training a deep neural network can take anywhere from hours to weeks or even months. The training time depends on the amount of data and the size of the network, such as the number of hidden layers: the more data and hidden layers, the longer it takes to train.
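One reason training time grows with the number of hidden layers is that every extra fully connected layer adds weights and biases that must be updated on every training step. This sketch counts the trainable parameters of a fully connected network from its layer sizes. The layer sizes are hypothetical examples (784 inputs corresponds to a 28×28 grayscale image, 10 outputs to ten classes).

```python
def count_parameters(layer_sizes):
    """Weights + biases of a fully connected network with the given layer sizes.

    Between a layer of size n_in and the next layer of size n_out there are
    n_in * n_out weights and n_out biases.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )

# Hypothetical networks: same input and output, more and more hidden layers.
print(count_parameters([784, 128, 10]))            # 101770
print(count_parameters([784, 128, 128, 10]))       # 118282
print(count_parameters([784, 128, 128, 128, 10]))  # 134794
```

Each extra 128-unit hidden layer here adds 16,512 parameters. This formula applies only to fully connected layers; convolutional and recurrent layers count their parameters differently.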

Deep Learning Frameworks

The most popular frameworks of DL are-

1. TensorFlow

- Created by: Google

- Best For: Beginners and experts

- Use Case: From research to large-scale production models

- TensorFlow is one of the most used frameworks in deep learning. It supports building and training models easily, and its high-level API, Keras, makes quick prototyping simple. TensorFlow Lite and TensorFlow.js extend its use to mobile and web applications.

2. PyTorch

- Created by: Facebook AI

- Best For: Researchers and fast prototyping

- Use Case: Computer vision, natural language processing (NLP), and deep learning research

- PyTorch is popular due to its easy-to-understand code and flexibility. It uses dynamic computational graphs, which means the graph is built on the fly as your code runs, so you can change the model’s behavior with ordinary Python code such as loops and if-statements. PyTorch is a favorite among researchers.

3. Keras

- Created by: François Chollet

- Best For: Beginners

- Use Case: Small to medium-sized projects

- Keras is a high-level API that was built to run on top of TensorFlow (since Keras 3, it can also run on JAX and PyTorch). It’s known for being user-friendly and easy to learn, making it a great starting point for people new to deep learning.

4. DeepLearning4J (DL4J)

- Created by: Skymind (now an Eclipse Foundation project)

- Best For: Java and Scala developers

- Use Case: Enterprise applications and big data tasks

- DL4J is a deep learning framework for Java and Scala. It’s great for businesses that use big data tools like Hadoop and Spark, making it useful in large-scale applications.

5. Caffe

- Created by: Berkeley AI Research

- Best For: Image classification

- Use Case: Fast image processing tasks

- Caffe is well known for its speed in image classification and other tasks using convolutional neural networks (CNNs). It’s often used in research but is not as actively updated as other frameworks.

6. Microsoft Cognitive Toolkit (CNTK)

- Created by: Microsoft

- Best For: Large-scale projects

- Use Case: Speech recognition, machine translation, and large applications

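- CNTK was designed for performance and scalability. It can handle large deep learning models, which made it well suited to big projects in speech and language processing. Note that Microsoft no longer actively develops CNTK; version 2.7 (2019) was its last main release.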

Comparison Table of Deep Learning Frameworks

| Framework | Best For | Main Use Cases | Ease of Use | Performance | Community Support | Key Strengths | Languages Supported |
|---|---|---|---|---|---|---|---|
| TensorFlow | Beginners to experts | Large-scale production models, mobile & web apps | Moderate | High | Excellent | Scalable, supports mobile and web (TensorFlow Lite & JS) | Python, C++, JavaScript |
| PyTorch | Researchers, fast prototyping | Computer vision, NLP, research projects | Easy | High | Excellent | Dynamic computational graphs, highly flexible | Python, C++ |
| Keras | Beginners | Small to medium-sized projects | Very easy | Moderate | Great | User-friendly, quick prototyping (runs on top of TensorFlow) | Python |
| DeepLearning4J (DL4J) | Java and Scala developers | Big data, enterprise-level applications | Moderate | High | Moderate | Integration with big data tools (Hadoop, Spark) | Java, Scala |
| Caffe | Image classification | Fast image processing tasks | Easy | High | Limited but available | Speed for CNN tasks, good for research | C++, Python, MATLAB |
| Microsoft CNTK | Large-scale, high-performance tasks | Speech recognition, machine translation | Moderate | Very High | Good | Scalability, optimized for distributed systems | Python, C++, C#, Java |

Different Types of Deep Learning Algorithms

Deep learning has several types of algorithms, each designed for specific tasks. Let’s look at the most popular ones:

1. Convolutional Neural Networks (CNNs)

- Used for: Image tasks like recognizing faces or objects, and analyzing videos.

- How it works: CNNs look for patterns in images, such as edges or colors, to understand what the picture is showing. This makes them great for image recognition tasks.
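To see how a CNN "looks for patterns such as edges", here is a minimal 2D convolution in pure Python. As in most deep learning libraries, it is technically a cross-correlation (the kernel is not flipped), with no padding and a stride of 1. The tiny image and the vertical-edge kernel are hand-picked for illustration; in a real CNN, the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-made vertical-edge detector (a real CNN would learn these numbers).
kernel = [
    [-1, 1],
    [-1, 1],
]

for row in conv2d(image, kernel):
    print(row)
# [0, 18, 0]
# [0, 18, 0]
# [0, 18, 0]
```

The large values in the middle column of the feature map mark exactly where the dark-to-bright edge is; flat regions produce 0.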

2. Recurrent Neural Networks (RNNs)

- Used for: Working with data that comes in a sequence, like sentences or time series data.

- How it works: RNNs remember what happened in previous steps and use that memory to predict the next step. They are good for understanding text, speech, or making predictions based on past data.
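The "memory" of an RNN is a hidden state that is updated at every step from the new input and the previous hidden state. This sketch uses a single-number hidden state and hand-picked weights (`w_x`, `w_h`, and `b` are illustrative assumptions, not learned values) to show the recurrence h_t = tanh(w_x·x_t + w_h·h_(t-1) + b).

```python
import math

def rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit RNN over `sequence` and return the hidden state after each step."""
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# The same values in a different order give a different final state,
# because the hidden state carries information from earlier steps.
print(rnn([1.0, 0.0, 0.0])[-1])
print(rnn([0.0, 0.0, 1.0])[-1])
```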

3. Long Short-Term Memory Networks (LSTMs)

- Used for: Tasks that need to remember information over time, like language translation or predicting trends.

- How it works: LSTMs are a special type of RNN that can remember information for a long time. This makes them better for tasks like writing sentences or predicting stock prices over time.
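An LSTM adds gates that decide what to forget, what to store, and what to output, plus a separate cell state that carries long-term memory. Below is one step of a single-unit LSTM following the standard gate equations. Every weight is an illustrative value, and the `params` layout (input weight, recurrent weight, bias per gate) is a hypothetical convention for this sketch; a real LSTM learns these values from data and works with vectors rather than single numbers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. `p` maps each gate to (input weight, recurrent weight, bias)."""
    f = sigmoid(p["f"][0] * x + p["f"][1] * h_prev + p["f"][2])    # forget gate
    i = sigmoid(p["i"][0] * x + p["i"][1] * h_prev + p["i"][2])    # input gate
    g = math.tanh(p["g"][0] * x + p["g"][1] * h_prev + p["g"][2])  # candidate value
    o = sigmoid(p["o"][0] * x + p["o"][1] * h_prev + p["o"][2])    # output gate
    c = f * c_prev + i * g  # keep part of the old memory, add part of the new
    h = o * math.tanh(c)    # expose part of the memory as the output
    return h, c

# Illustrative parameters: a large forget-gate bias keeps f close to 1,
# so the cell state is preserved across many steps.
params = {"f": (0.0, 0.0, 5.0), "i": (1.0, 0.0, 0.0), "g": (1.0, 0.0, 0.0), "o": (1.0, 0.0, 0.0)}

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 3))  # the value stored at step 1 is still largely there
```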

4. Generative Adversarial Networks (GANs)

- Used for: Creating new things like images, videos, or music.

- How it works: GANs have two parts: one creates fake data, and the other tries to figure out if the data is fake or real. They are great at generating realistic-looking images or videos.
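Training a full GAN needs a deep learning framework, but the "one network fakes, the other judges" idea is captured by the two loss functions. This sketch computes the standard discriminator loss and the commonly used non-saturating generator loss from the discriminator's output probabilities. The probabilities passed in are made-up numbers, not outputs of a trained model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: the discriminator wants D(real) -> 1 and D(fake) -> 0.

    d_real: discriminator probabilities for real samples.
    d_fake: discriminator probabilities for generated (fake) samples.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Made-up discriminator outputs for two situations.
# 1) The discriminator easily spots the fakes: low D loss, high G loss.
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]), generator_loss([0.05, 0.1]))
# 2) The fakes fool the discriminator: high D loss, low G loss.
print(discriminator_loss([0.6, 0.5], [0.8, 0.9]), generator_loss([0.8, 0.9]))
```

During training, the two networks take turns lowering their own loss, which is what pushes the generator toward more realistic output.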

5. Autoencoders

- Used for: Compressing data, cleaning up noisy data, or detecting unusual things in data.

- How it works: Autoencoders reduce data to a smaller form and then rebuild it. This can be used to remove noise from images or find patterns that don’t belong.
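The "reduce, then rebuild" idea can be shown without training by using a fixed linear encoder and decoder. This sketch is a hand-built stand-in for a trained autoencoder, not a trained one: 2D points are compressed to a single number by projecting them onto the direction (1, 2), then rebuilt from that number. Points that follow the pattern are rebuilt almost perfectly, while an unusual point has a large reconstruction error, which is how autoencoders are used to flag anomalies.

```python
import math

# Hand-chosen direction standing in for what a trained encoder would learn.
norm = math.hypot(1.0, 2.0)
direction = (1.0 / norm, 2.0 / norm)

def encode(point):
    """Compress a 2D point to one number (its coordinate along `direction`)."""
    return point[0] * direction[0] + point[1] * direction[1]

def decode(code):
    """Rebuild a 2D point from the one-number code."""
    return (code * direction[0], code * direction[1])

def reconstruction_error(point):
    """Squared distance between a point and its reconstruction."""
    rebuilt = decode(encode(point))
    return (point[0] - rebuilt[0]) ** 2 + (point[1] - rebuilt[1]) ** 2

for point in [(1.0, 2.0), (2.0, 4.1), (2.0, -1.0)]:
    print(point, round(reconstruction_error(point), 3))
# (1.0, 2.0) 0.0    <- follows the pattern y = 2x
# (2.0, 4.1) 0.002  <- close to the pattern
# (2.0, -1.0) 5.0   <- does not belong: large error
```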

6. Deep Belief Networks (DBNs)

- Used for: Learning important features from data and reducing its size.

- How it works: DBNs are built with layers of networks that learn features step by step. They can help simplify data, making it easier for other algorithms to work with it.

How These Algorithms Help:

- CNNs are great for visual tasks like recognizing objects in images.

- RNNs and LSTMs are useful for working with sequences like text or time-based data.

- GANs are good for creating realistic images, videos, or even sound.

- Autoencoders help compress data and clean up noise.

- DBNs are good for reducing data size and learning important features.

I hope you now have a clear idea of what DL is, why it is so popular, and where it is used. If you have any questions, feel free to ask me in the comment section.

Enjoy Learning!

All the Best!


Learn how a neural network works here.

What is an activation function? Read about it here.

Read about gradient descent here- Gradient Descent- Understand Completely in a Super Easy Way!

Read about stochastic gradient descent here- Stochastic Gradient Descent- A Super Easy Complete Guide!

Are you interested in how an artificial neural network works? Then read this blog- Artificial Neural Network.

Thank YOU!

Thought of the Day…

‘It’s what you learn after you know it all that counts.’

– John Wooden

Read Deep Learning Basics here.

Written By Aqsa Zafar

Founder of MLTUT and Machine Learning Ph.D. scholar at Dayananda Sagar University. Researching social media depression detection. Creates tutorials on ML and data science for diverse applications. Passionate about sharing knowledge through the website and social media.
