Do you want to know what Retrieval Augmented Generation (RAG) in AI is? If so, this blog is for you. In it, I explain RAG in the simplest way I can.

Now, without further ado, let’s get started-

What is Retrieval Augmented Generation (RAG) in AI? Full Guide

- What is Retrieval Augmented Generation (RAG) in AI?

- The Relationship Between RAG, LLM, ChatGPT, AI, ML, and Generative AI

- Best Large Language Models (LLMs) Courses

- Application of Retrieval Augmented Generation (RAG)

- How to Implement RAG Using Python Code?

- Limitations and Challenges of Retrieval Augmented Generation (RAG)

- Best Tools for Retrieval Augmented Generation (RAG)

- Conclusion

- FAQ

First, let’s understand what Retrieval Augmented Generation (RAG) in AI is.

What is Retrieval Augmented Generation (RAG) in AI?

Imagine you’re writing a story, and you want to include some cool facts or information to make it more interesting. But, here’s the catch: you’re not sure what facts to add or where to find them. That’s where RAG comes in.

Think of RAG like having a super-smart assistant who can help you find just the right information you need, exactly when you need it. It’s like having Google, but way smarter.

Here’s how it works:

- Retrieval: You start with a question or a request. For example, you might write, “Tell me about space travel.” Then, RAG springs into action. It goes through a huge database of information, kind of like flipping through a gigantic bookshelf filled with books about everything under the sun. It finds the most relevant information related to your query. So, for our space travel example, RAG might find information about different space missions, astronauts, and how rockets work.

- Augmentation: Next, RAG takes all that juicy information it found and adds it to your original question, so the language model sees both your request and the supporting facts.

- Generation: Finally, the language model writes its answer using that augmented prompt. It’s like your story just got a major upgrade with all these cool facts and details.

So, with RAG, you can create content that’s not only well-written but also packed with accurate and interesting information. It’s like having a knowledgeable friend by your side whenever you’re writing, ready to help you make your work shine. And that’s Retrieval Augmented Generation in a nutshell!
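To make the three steps concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the three-sentence knowledge base, the word-overlap scoring, and the `generate` function, which is only a placeholder for a call to a real language model.

```python
import re

# Hypothetical mini knowledge base; a real system would search a far larger store.
DOCUMENTS = [
    "Rockets work by burning fuel and expelling gas to produce thrust.",
    "Space travel is the journey into outer space by astronauts.",
    "Bread rises because yeast produces carbon dioxide.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Retrieval: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def augment(query, passages):
    """Augmentation: put the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def generate(prompt):
    """Generation: placeholder standing in for a real language model call."""
    return f"[a language model would answer here, given {len(prompt)} characters of prompt]"


query = "Tell me about space travel."
passages = retrieve(query, DOCUMENTS)
prompt = augment(query, passages)
print(prompt)
print(generate(prompt))
```

Real systems swap the word-overlap scoring for a proper search index and the placeholder for an actual model, but the retrieve, augment, generate flow stays the same.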

The next biggest confusion people have nowadays is how RAG, LLM, ChatGPT, AI, ML, and Generative AI are connected. Right?

So, let’s understand the Relationship Between RAG, LLM, ChatGPT, AI, ML, and Generative AI.

The Relationship Between RAG, LLM, ChatGPT, AI, ML, and Generative AI

Let’s connect the dots between RAG, LLM, ChatGPT, AI, ML, and generative AI.

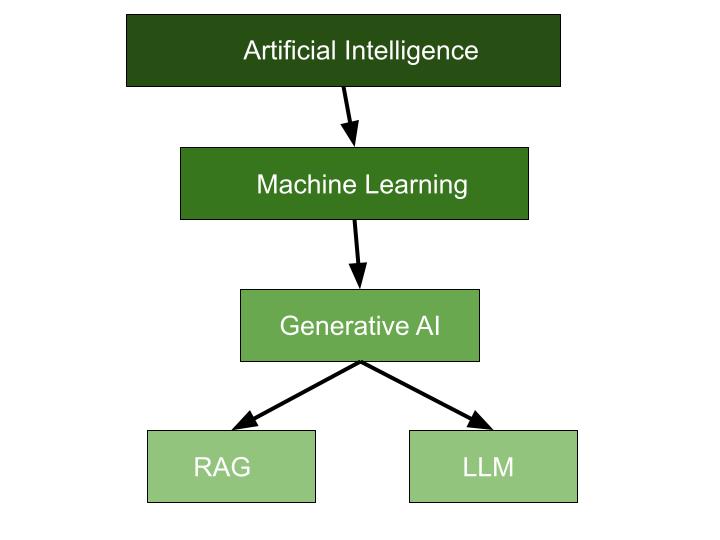

AI (Artificial Intelligence) is the big umbrella term here. It’s like the parent category that includes a bunch of different types of smart computer programs.

ML (Machine Learning) is a subset of AI. It’s all about teaching computers to learn from data, so they can make decisions or predictions without being explicitly programmed for every task. It’s like teaching a kid to ride a bike by showing them a bunch of bike-riding videos and letting them figure it out.

Generative AI is a type of AI that’s really good at creating new stuff, like text, images, or music, based on what it’s learned from a bunch of examples. It’s like having a super creative robot that can write stories, draw pictures, or compose music all on its own.

Now, let’s get into the specific models:

ChatGPT is a generative AI application: a chatbot built on top of a large language model. It’s like a super chatty robot friend that can have conversations with you, write stories, answer questions, and even tell jokes! The model behind it is trained on a huge amount of text, much of it from the internet, so it knows a ton of stuff about all kinds of topics.

LLM (Large Language Model) is the kind of model that powers tools like ChatGPT. It’s like a super-smart language expert who can understand and generate human-like text. It’s trained on a massive amount of text data, so it’s really good at understanding and producing natural language.

RAG (Retrieval Augmented Generation) is a fancy combination of generative AI and information retrieval. Remember how I explained that generative AI can create new stuff based on what it’s learned? Well, RAG takes that a step further by adding a search function. It can not only generate new text but also find and incorporate relevant information from a huge database. So, it’s like having a super-powered research assistant who can write essays, articles, or stories filled with accurate and interesting facts.

In summary, AI is the big picture, ML is a method it uses to learn, generative AI creates new stuff, LLMs are generative AI models that focus on language, ChatGPT is a chatbot built on an LLM, and RAG is a technique that combines an LLM’s text generation with information retrieval.

I hope that clears things up. Before we move on to the applications of Retrieval Augmented Generation (RAG), here are some courses if you want to learn more about large language models.

Best Large Language Models (LLMs) Courses

- Introduction to Large Language Models – Coursera

- Generative AI with Large Language Models – Coursera

- Large Language Models (LLMs) Concepts – DataCamp

- Prompt Engineering for ChatGPT – Vanderbilt University

- Introduction to LLMs in Python – DataCamp

- ChatGPT Teach-Out – University of Michigan

- Large Language Models for Business – DataCamp

- Introduction to Large Language Models with Google Cloud – Udacity FREE Course

- Finetuning Large Language Models – Coursera

- LangChain with Python Bootcamp – Udemy

Application of Retrieval Augmented Generation (RAG)

- Writing Cool Stuff: Imagine you’re writing something, like a story or an article, and you want to make it really interesting. RAG can help by finding extra cool facts and information to add to your writing. It’s like having a super-smart assistant who knows everything and can make your writing even better.

- Answering Questions: Have you ever asked a question and wanted a really good answer? RAG can help with that too! It can search through lots of information and base its answer on what it finds, instead of relying only on what the model remembers. It’s like having your own personal encyclopedia that talks to you.

- Learning Stuff: If you’re trying to learn something new, RAG can be like your study buddy. It can help you understand complicated stuff by explaining it in simpler words or showing you examples. It’s like having a really smart friend who’s always ready to help with homework.

- Making Chatbots Smarter: You know those chatbots you sometimes talk to online? Well, RAG can make them even smarter! It can help them have better conversations with you by giving them more interesting things to say and better answers to your questions.

- Making Shorter Versions: Ever had to read a really long article or report and wished someone could just give you the important parts? RAG can help with that too! It can pull out the most relevant passages from long material so the language model can turn them into a shorter, easier-to-understand version.

- Helping with Different Languages: If you’re trying to talk to someone who speaks a different language, RAG can help with that as well. The language model can translate and explain things in different languages, and retrieval can supply reference material to work from, so you can understand each other better.

- Figuring Out Data: Sometimes, there are a lot of numbers and data that can be confusing. RAG can help by explaining what it all means in plain language. It’s like having a data expert who can make graphs and charts easy to understand.

So, RAG is basically like a super helpful friend who’s really good at finding information and explaining things in a way that’s easy to understand.

Now, let’s see how to implement RAG in Python.

How to Implement RAG Using Python Code?

Implementing Retrieval Augmented Generation (RAG) in Python involves several steps. First, you set up your coding space, then you grab the tools you need. After that, you gather your info, and finally, you build your model.

Now, let’s see each step in detail-

Step 1- Setting Up the Environment

- First, make sure you have Python installed on your computer. You can download it from the official Python website if you haven’t already.

- Next, it’s a good practice to set up a virtual environment to keep your project dependencies separate. You can do this using a tool like virtualenv or conda.

# Create a virtual environment

virtualenv myenv

# Activate the virtual environment

source myenv/bin/activate

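Step 2- Installing Libraries

- We’ll need to install the necessary libraries for working with RAG. The primary library we’ll use is Hugging Face’s transformers, which provides pre-trained models and tools for natural language processing tasks. Its RAG classes also rely on torch to run the model, and on datasets and faiss-cpu for the retriever’s index.

# Install transformers and the libraries its RAG classes depend on

pip install transformers torch datasets faiss-cpu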
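Before moving on, it can help to confirm that the packages are visible from inside your virtual environment. The sketch below uses only the standard library. The package list is an assumption: besides transformers, it includes torch, datasets, and faiss (the import name for the faiss-cpu package), which the RAG classes in transformers rely on.

```python
import importlib.util

# Packages this tutorial's RAG example is assumed to need (import names).
REQUIRED = ["transformers", "torch", "datasets", "faiss"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```

If anything shows up as missing, install it with pip inside the activated environment and run the check again.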

Step 3- Preparing the Data

- Depending on your specific task, you’ll need to prepare your dataset. This could involve collecting text data from various sources or using existing datasets.

- For demonstration purposes, let’s assume we have a small dataset of questions and answers related to space travel.

# Example dataset

dataset = [

{"question": "What is space travel?", "answer": "Space travel is the journey into outer space by astronauts."},

{"question": "How do rockets work?", "answer": "Rockets work by propelling themselves using engines that burn fuel and expel gas."},

# Add more questions and answers as needed

]
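To see how a dataset like this could be searched, here is a small sketch that turns each entry into one passage and ranks passages against a query with bag-of-words cosine similarity. This is a simple stand-in chosen for illustration, not the retrieval method the pre-trained RAG models use, and the helper names (`bag_of_words`, `cosine_similarity`, `search`) are made up for this example.

```python
import math
import re
from collections import Counter

# Same two entries as the example dataset, repeated so the sketch runs on its own.
dataset = [
    {"question": "What is space travel?",
     "answer": "Space travel is the journey into outer space by astronauts."},
    {"question": "How do rockets work?",
     "answer": "Rockets work by propelling themselves using engines that burn fuel and expel gas."},
]


def bag_of_words(text):
    """Count how often each lowercase word appears in the text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# One passage per entry: question and answer joined into a single searchable text.
passages = [f"{item['question']} {item['answer']}" for item in dataset]
passage_vectors = [bag_of_words(p) for p in passages]


def search(query, top_k=1):
    """Return the top_k (score, passage) pairs, best match first."""
    query_vector = bag_of_words(query)
    scored = [
        (cosine_similarity(query_vector, vector), passage)
        for vector, passage in zip(passage_vectors, passages)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


for score, passage in search("How does a rocket engine burn fuel?"):
    print(f"{score:.2f}  {passage}")
```

Word counts only match exact words, so “rocket” and “rockets” are treated as different terms. That weakness is one reason real RAG systems tend to use learned embeddings for retrieval.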

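Step 4- Building the Model

- We’ll use the transformers library to load a pre-trained RAG model and configure it for our task. The tokenizer, retriever, and model should all come from the same checkpoint. Here that is facebook/rag-sequence-nq, which matches the RagSequenceForGeneration class. Setting use_dummy_dataset=True loads a small demo index instead of the full Wikipedia index, which is a very large download.

from transformers import RagRetriever, RagTokenizer, RagSequenceForGeneration

# Load the tokenizer that matches the checkpoint

tokenizer = RagTokenizer.from_pretrained("facebook/rag-sequence-nq")

# Load the retriever with a small demo index (not the full Wikipedia index)

retriever = RagRetriever.from_pretrained("facebook/rag-sequence-nq", index_name="exact", use_dummy_dataset=True)

# Load the generator and attach the retriever to it

rag_sequence = RagSequenceForGeneration.from_pretrained("facebook/rag-sequence-nq", retriever=retriever)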

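Step 5- Using the Model

- Now that we have our RAG model set up, we can use it to generate text given a prompt or question. The model works on token IDs, so we tokenize the prompt first and decode the generated IDs back into text.

# Prompt for text generation

prompt = "Tell me about space travel."

# Convert the prompt into token IDs

inputs = tokenizer(prompt, return_tensors="pt")

# Retrieve passages and generate an answer

generated_ids = rag_sequence.generate(input_ids=inputs["input_ids"])

# Decode the generated token IDs back into text

generated_text = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

print(generated_text)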

Output

[{'generated_text': "Space travel, also known as spaceflight or space voyage, is the act of traveling into or through outer space. It is done by launching a spacecraft, such as a rocket or space shuttle, from Earth into space. Space travel allows humans to explore the cosmos, conduct scientific research, and even live and work in space aboard space stations like the International Space Station (ISS). Since the dawn of the space age in the mid-20th century, space travel has become an integral part of human exploration and discovery. It has led to numerous achievements, including the first human steps on the Moon, the exploration of Mars and other planets, and the study of distant galaxies and celestial bodies. Space travel is facilitated by advanced technologies such as rocket propulsion, spacecraft design, and life support systems, which enable astronauts to survive and thrive in the harsh conditions of space. Despite the challenges and risks involved, space travel continues to inspire and captivate people around the world, fueling dreams of exploring new frontiers and expanding humanity's presence beyond Earth."}]

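The output above illustrates the kind of response a RAG system can give to the prompt “Tell me about space travel.”, covering the history, significance, and technological aspects of space travel. What you actually see depends on the checkpoint and on the index the retriever searches. Checkpoints fine-tuned for question answering, such as the Natural Questions variants, tend to return short factual answers rather than long paragraphs, and a small demo index limits what can be retrieved.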

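That’s it! You’ve now run a pre-trained Retrieval Augmented Generation (RAG) model in Python. This example demonstrates a basic setup and usage of RAG. Note that it does not yet use the dataset from Step 3: to have the model answer from your own data, you would build a custom index for the retriever, and you can further customize and fine-tune the model according to your specific requirements.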

Feel free to explore more advanced features and functionalities of the transformers library for additional capabilities.

Now, let’s see the limitations and challenges of Retrieval Augmented Generation (RAG).

Limitations and Challenges of Retrieval Augmented Generation (RAG)

- Finding the Right Information: Imagine you’re trying to find a specific book in a massive library. Sometimes, RAG might struggle to pinpoint the exact information we need from its vast database. It’s like searching for a needle in a haystack!

- Quality of Retrieved Information: Just like not all books in a library are top-notch, not all information retrieved by RAG might be accurate or relevant. We have to be careful to sift through and verify the information it provides to ensure its reliability.

- Bias and Diversity: RAG learns from the data it’s trained on, which means it might unintentionally reflect biases or lack diversity in its responses. We need to be mindful of this and take steps to mitigate biases to ensure fair and inclusive results.

- Complexity of Generation: Generating text that’s both accurate and coherent is no small feat. RAG has to juggle multiple tasks like understanding the prompt, retrieving relevant information, and crafting a meaningful response. It’s like solving a tricky puzzle with many moving parts!

- Resource Intensiveness: Training and fine-tuning RAG models require significant computational resources and time. Not everyone has access to these resources, which can limit the widespread adoption and accessibility of RAG-powered applications.

- Ethical Considerations: As with any powerful tool, there are ethical considerations to take into account. We need to think about how RAG might be used responsibly and ethically to avoid potential misuse or harm.
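One common way to soften the first two problems is to drop weakly matching passages and to tell the model plainly when nothing reliable was found. The sketch below shows the idea. The score threshold, the prompt wording, and the sample scores are all assumptions for illustration, and the right cutoff depends on the retriever you use.

```python
def select_passages(scored_passages, min_score=0.3, max_passages=3):
    """Keep the best-scoring passages, dropping any below min_score."""
    kept = [(score, text) for score, text in scored_passages if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept[:max_passages]]


def build_prompt(question, passages):
    """Build a prompt that asks the model to admit when no source was found."""
    if not passages:
        return (
            "No reliable source was found for this question. "
            "Say that you do not know.\n\n"
            f"Question: {question}"
        )
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below and cite them.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical retriever output: (similarity score, passage text) pairs.
results = [
    (0.82, "Rockets expel gas to produce thrust."),
    (0.12, "Bread rises because of yeast."),
]
print(build_prompt("How do rockets work?", select_passages(results)))
```

Numbering the sources also makes it easier to check an answer against the passages it was based on.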

Best Tools for Retrieval Augmented Generation (RAG)

Let’s explore some of the best tools for Retrieval Augmented Generation (RAG)–

- Hugging Face Transformers: Hugging Face offers a bunch of tools and models for playing with text. They’ve got this library called “transformers” that makes it super easy to work with RAG. People really like it because it’s user-friendly and has lots of options.

- Facebook’s RAG: Researchers at Facebook AI (now Meta AI) introduced RAG in 2020 and released some ready-to-use models for it. You can grab these models through the transformers library and use them for your projects. They are a popular starting point among folks tinkering with RAG.

- Google’s T5 (Text-To-Text Transfer Transformer): Google has this cool model called T5 that’s like a Swiss Army knife for text tasks. It’s not specifically made for RAG, but you can tweak it to do RAG stuff too. People like T5 because it’s versatile and can handle lots of different text jobs.

- OpenAI’s GPT (Generative Pre-trained Transformer) Series: OpenAI has these models called GPT-2 and GPT-3 that are great for making up new text. They don’t do RAG by themselves, but you can pair them with other tools for the retrieval part. People love GPT models because they’re really good at writing like humans.

- Elasticsearch: Elasticsearch is like a super-powered search engine for text. You can use it to find stuff in big piles of text data, which is perfect for RAG projects. People use Elasticsearch with other tools to fetch info for text generation.

- PyTorch and TensorFlow: These are fancy names for tools that help you build and train your own RAG models from scratch. They’re like toolboxes for deep learning stuff. People who want to customize their RAG models use PyTorch and TensorFlow.

- Dense Passage Retrieval (DPR): Dense Passage Retrieval turns questions and text passages into vectors and finds the passages whose vectors are closest to the question’s. You can pair it up with generative models like GPT or T5 to do RAG. People like DPR because it can match passages by meaning, not just by shared keywords.

These are just a few examples of the best tools available for Retrieval Augmented Generation. Depending on your specific needs and preferences, you may choose to use one or more of these tools in combination to achieve optimal results in your RAG projects.
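To give a feel for how dense retrieval ranks passages, here is a toy sketch. The three-dimensional vectors are invented for illustration. In a real setup they would come from trained question and passage encoders, and a vector index would replace the plain sort.

```python
# Toy 3-dimensional "embeddings", invented for illustration. A real dense
# retriever would get these vectors from trained question and passage encoders.
PASSAGE_EMBEDDINGS = {
    "Rockets burn fuel and expel gas to produce thrust.": [0.9, 0.1, 0.0],
    "Astronauts live and work aboard the space station.": [0.2, 0.9, 0.1],
    "Yeast makes bread dough rise.": [0.0, 0.1, 0.9],
}


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def dense_search(query_embedding, passage_embeddings, top_k=1):
    """Return the top_k passages whose embeddings score highest against the query."""
    ranked = sorted(
        passage_embeddings,
        key=lambda passage: dot(query_embedding, passage_embeddings[passage]),
        reverse=True,
    )
    return ranked[:top_k]


# Pretend an encoder mapped "How do rockets fly?" to this vector.
query_embedding = [0.8, 0.2, 0.1]
print(dense_search(query_embedding, PASSAGE_EMBEDDINGS))
```

The generator then receives the top-ranked passages as extra context, exactly as in the augmentation step described earlier.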

Conclusion

In this article, I have discussed what Retrieval Augmented Generation (RAG) in AI is. If you have any doubts or queries, feel free to ask me in the comment section. I am here to help you.

All the Best for your Career!

Happy Learning!

FAQ


Thank YOU!


Thought of the Day…

‘It’s what you learn after you know it all that counts.’

– John Wooden

Written By Aqsa Zafar

Founder of MLTUT, Machine Learning Ph.D. scholar at Dayananda Sagar University. Research on social media depression detection. Create tutorials on ML and data science for diverse applications. Passionate about sharing knowledge through website and social media.